Revisit the CDAC Winter 2021 Distinguished Speaker Series

Bias Correction: Solutions for Socially Responsible Data Science

Security, privacy, and bias in machine learning are often treated as binary issues, where an algorithm is either biased or fair, ethical or unjust. In reality, using technology means trading its benefits against the new privacy and security risks it opens up. Researchers are developing innovative tools that navigate these tradeoffs by applying advances in machine learning to societal issues without exacerbating bias or endangering privacy and security. The CDAC Winter 2021 Distinguished Speaker Series hosted interdisciplinary researchers and thinkers exploring methods and applications that protect user privacy, prevent malicious use, and avoid deepening societal inequities, while diving into the human values and decisions that underpin these approaches.

Watch a playlist of the talks below:

Speakers:

January 25: Olga Russakovsky, Assistant Professor, Princeton University & Co-Founder, AI4All

February 1: Mukund Sundararajan, Principal Research Scientist/Director, Google (co-presented with the Center for Applied AI at the Booth School of Business)

February 8: Brian Christian, Journalist/Author (co-presented with the Mansueto Institute for Urban Innovation)

February 16: Andrea G. Parker, Associate Professor, School of Interactive Computing, Georgia Tech

February 22: Timnit Gebru, Ethical AI Researcher

March 1: Deirdre Mulligan, Professor & Director of Berkeley Center for Law & Technology, UC Berkeley

March 15: Kate Crawford, Visiting Chair of AI and Justice, École Normale Supérieure

Community Data Fellow Stephania Tello Zamudio helps broaden internet access for Illinois residents

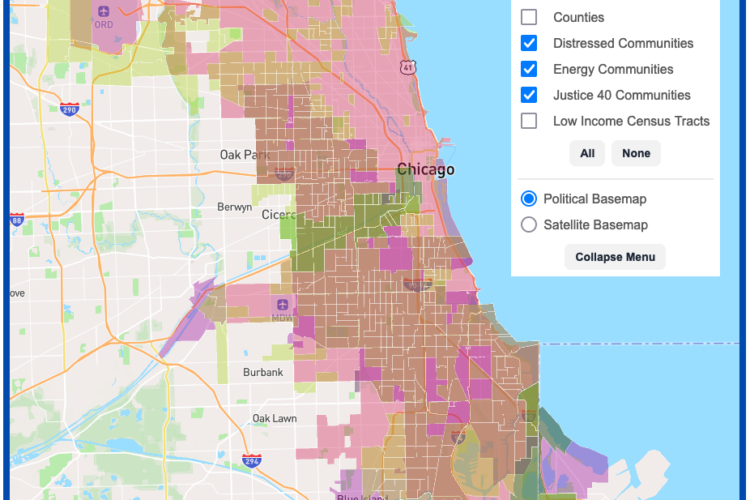

DSI Software Engineers create interactive map tool to maximize climate investment tax benefits

Transform cohort 3 participant Healee uses AI to improve healthcare