Nick Feamster Discusses Facebook Content Moderation on Chicago Tonight

Last week, Facebook founder Mark Zuckerberg faced tough questions from Congress about the company’s policies on moderating content posted by its users, with some observers advocating for government regulation of social media platforms.

Professor Nick Feamster joined WTTW’s Chicago Tonight to talk about the issue and the technical challenges for companies like Facebook and Twitter in monitoring and removing offensive or misleading content.

“These social media platforms are processing hundreds of millions of posts per day,” Feamster said. “If you have regulations put in place that basically require the platforms to moderate the content, once you start making these asks you are basically saying that the approach must be automated. No human can look at all of this content – not even the tens of thousands of content moderators that Facebook has hired could possibly look at all of it – particularly within a short time frame.”

And, Feamster says, while some people may be unhappy with Facebook for not doing enough, the company is making more of an effort than other major platforms. Twitter and YouTube, he says, are doing “basically nothing” to vet content.

Feamster also talks about research in which his group placed political advertisements on social media platforms to assess how difficult this content is to detect. You can watch the full segment below:

Community Data Fellow Stephania Tello Zamudio helps broaden internet access for Illinois residents

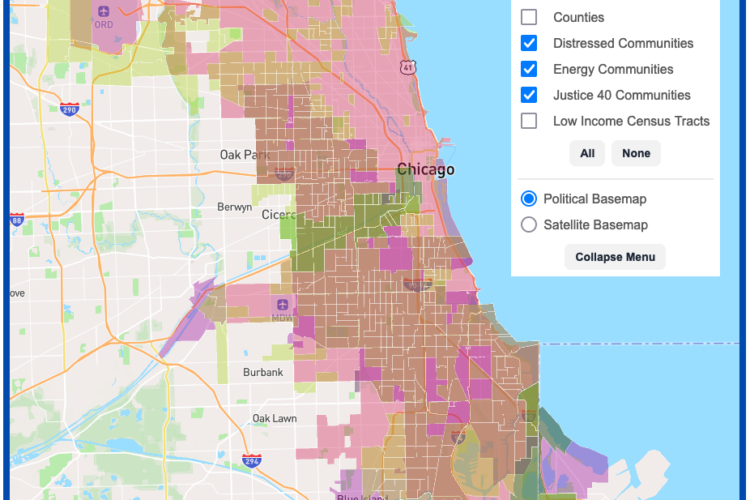

DSI Software Engineers create interactive map tool to maximize climate investment tax benefits

Incoming UChicago Data Science and Computer Science Associate Professor raises additional $106 million in new funding round for company, Together AI