2022 Rising Stars

Autumn 2022 Cohort

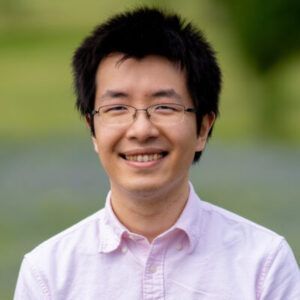

Adityanarayanan Radhakrishnan

PhD Candidate, Massachusetts Institute of Technology

Amanda Coston

PhD Candidate, Carnegie Mellon University

Anushri Dixit

PhD Candidate, California Institute of Technology

Blair Bilodeau

PhD Candidate, University of Toronto

Chan Young Park

PhD Candidate, Carnegie Mellon University

Chao Ma

Szego Assistant Professor, Stanford University

Eamon Duede

PhD Candidate, University of Chicago

Gemma Moran

Postdoc, Columbia University

Harlin Lee

Hedrick Assistant Adjunct Professor (Postdoc), University of California, Los Angeles

Helen Qu

PhD Candidate, University of Pennsylvania

Jian Kang

PhD Candidate, University of Illinois Urbana-Champaign

Jingyan Wang

Postdoctoral Fellow, Georgia Institute of Technology

Jonatas Marques

Research Assistant, Federal University of Rio Grande do Sul (Brazil)

Laixi Shi

PhD Candidate, Carnegie Mellon University

Linyi Li

PhD Candidate, University of Illinois Urbana-Champaign

Lu Gan

Postdoctoral Scholar, California Institute of Technology

Nwamaka Okafor

PhD Student, University College Dublin

Oscar Leong

von Karman Instructor, California Institute of Technology

Ruishan Liu

Postdoctoral Scholar, Stanford University

Ryan Shi

PhD Candidate, Carnegie Mellon University

Tian Li

PhD Candidate, Carnegie Mellon University

Valentin Hofmann

PhD Student, University of Oxford

Vishwali Mhasawade

PhD Candidate, New York University

Wonjun Lee

Postdoctoral Fellow, University of Minnesota, Twin Cities

Xintong Wang

Postdoctoral Fellow, Harvard University

Yandi Shen

Postdoc, The University of Chicago

Yi Wu

PhD Student, University of Toronto

Yiping Lu

PhD Student, Stanford University

Yiqun Chen

Postdoctoral Fellow, Stanford University

Yuntian Deng

PhD Student, Harvard University

Zhimei Ren

Postdoc, The University of Chicago

Zichao Wang

PhD Student, Rice University

Bio: Adityanarayanan (Adit) Radhakrishnan is a Ph.D. candidate in the Electrical Engineering and Computer Science (EECS) department at MIT advised by Caroline Uhler. He is also a Ph.D. fellow at the Eric and Wendy Schmidt Center of the Broad Institute of Harvard and MIT. Prior to his Ph.D., Adit completed his Masters in EECS and Bachelors in EECS and mathematics at MIT. Adit’s research focuses on advancing theoretical understanding of over-parameterized neural networks in order to develop simple and effective methods for applications in healthcare and computational biology.

Talk Title: Wide and Deep Networks Achieve Consistency in Classification

Talk Abstract: While neural networks are used for classification tasks across domains, a long-standing open problem in machine learning is determining whether neural networks trained using standard procedures are consistent for classification, i.e., whether such models minimize the probability of misclassification for arbitrary data distributions. In this work, we identify and construct an explicit set of neural network classifiers that are consistent. Since effective neural networks in practice are typically both wide and deep, we analyze infinitely wide networks that are also infinitely deep. In particular, using the recent connection between infinitely wide neural networks and Neural Tangent Kernels, we provide explicit activation functions that can be used to construct networks that achieve consistency. Interestingly, these activation functions are simple and easy to implement, yet differ from commonly used activations such as ReLU or sigmoid. More generally, we create a taxonomy of infinitely wide and deep networks and show that these models implement one of three well-known classifiers depending on the activation function used: (1) 1-nearest neighbor (model predictions are given by the label of the nearest training example); (2) majority vote (model predictions are given by the label of the class with greatest representation in the training set); or (3) singular kernel classifiers (a set of classifiers containing those that achieve consistency). Our results highlight the benefit of using deep networks for classification tasks, in contrast to regression tasks, where excessive depth is harmful.

Bio: Amanda Coston is a PhD student in Machine Learning and Public Policy at Carnegie Mellon University. Her research investigates how to make algorithmic decision-making more reliable and more equitable using causal inference and machine learning. She is advised by Alexandra Chouldechova and Edward H. Kennedy. She completed a B.S.E. at Princeton, where she was advised by Robert Schapire. Prior to her PhD, she worked at Microsoft, the consultancy Teneo, and the Nairobi-based startup HiviSasa. She is a Meta Research PhD Fellow (2022), K & L Gates Presidential Fellow in Ethics and Computational Technologies (2020), and NSF GRFP Fellow (2019).

Talk Title: Validity, equity, and oversight in societally consequential machine learning

Talk Abstract: Machine learning algorithms are widely used for decision-making in societally high-stakes settings from child welfare and criminal justice to healthcare and consumer lending. Recent history has illuminated numerous examples where these algorithms proved unreliable or inequitable. We take a principled approach to the use of machine learning in societally high-stakes settings, guided by three pillars: validity, equity, and oversight. We address data problems that challenge the validity of algorithmic decision support systems by developing methods for algorithmic risk assessments that account for selection bias, confounding, and bandit feedback. We conduct audits for bias throughout the systems in which algorithms are used to inform decision-making. Throughout we propose novel methods that use doubly-robust techniques for bias correction. We apply our methods to the consumer lending context where we find that use of our methods could extend lending opportunities to applicants from historically underbanked regions without an increase in the average rate of default. We present additional findings in the child welfare, criminal justice and healthcare settings.

Bio: Anushri Dixit is a Ph.D. candidate at California Institute of Technology in Control and Dynamical Systems and is advised by Prof. Joel Burdick. Her research focuses on motion planning and control of robots in extreme terrain while accounting for uncertainty in a principled manner. Her work on risk-aware methodologies for planning has been deployed on various robotic platforms as a part of the Team CoSTAR’s effort in the DARPA Subterranean Challenge. She has received the DE Shaw Zenith Fellowship and was selected as a rising star at the Southern California Robotics Symposium. Prior to her Ph.D., she earned her B.S. in Electrical Engineering from Georgia Institute of Technology in 2017.

Talk Title: Towards Risk-Aware Robotic Autonomy in Extreme Environments: Robustness to Distribution Shifts in Control and Planning

Talk Abstract: Robots provide the crucial ability to replace humans in settings that are inaccessible due to environmental hazards, such as search and rescue operations. They need to be able to reason about the risk in an environment that is perceptually degraded and complete tasks while maintaining safety. Providing safety and performance guarantees for motion planning and control algorithms is a well-studied problem for robotic systems with well-known dynamics that operate in structured environments. However, when robots operate in a real-world setting where the environment is dynamic and unstructured, common distributional assumptions used to develop the planning algorithms are no longer valid and, consequently, the safety guarantees no longer hold. In this talk, I will focus on risk-aware methodologies for robotic autonomy in unstructured environments. I will provide data-driven motion planning techniques to account for uncertainty in dynamic environments and sample-based risk bounds to provide high-confidence verification statements for robotic systems. The goal of the talk is to understand how robots interpret the ambiguity in their environment, and the ways to generate policies that better account for this uncertainty.

Bio: Blair Bilodeau is a graduating PhD candidate in statistical sciences at the University of Toronto, advised by Daniel Roy. His PhD was funded by an NSERC Doctoral Canada Graduate Scholarship and the Vector Institute. Blair’s research area is broadly the theoretical foundations of statistical decision making, with applications to both statistical methodology and machine learning algorithms. His contributions span fundamental problems in inference and prediction, including: adaptive sequential decision making, minimax conditional density estimation, and numerical quadrature for statistical inference. Previously, Blair completed his BSc at Western University.

Talk Title: Adaptively Exploiting d-Separators with Causal Bandits

Talk Abstract: Multi-armed bandit problems provide a framework to identify the optimal intervention over a sequence of repeated experiments. Without additional assumptions, minimax optimal performance (measured by cumulative regret) is well-understood. With access to additional observed variables that d-separate the intervention from the outcome (i.e., they are a d-separator), recent “causal bandit” algorithms provably incur less regret. However, in practice it is desirable to be agnostic to whether observed variables are a d-separator. Ideally, an algorithm should be adaptive; that is, perform nearly as well as an algorithm with oracle knowledge of the presence or absence of a d-separator. In this work, we formalize and study this notion of adaptivity, and provide a novel algorithm that simultaneously achieves (a) optimal regret when a d-separator is observed, improving on classical minimax algorithms, and (b) significantly smaller regret than recent causal bandit algorithms when the observed variables are not a d-separator. Crucially, our algorithm does not require any oracle knowledge of whether a d-separator is observed. We also generalize this adaptivity to other conditions, such as the front-door criterion.

Bio: Chan Park is a fifth-year PhD student at the Language Technologies Institute at Carnegie Mellon University. She is advised by Professor Yulia Tsvetkov, and is currently a visiting PhD student at the University of Washington. She is a recipient of a KFAS Overseas PhD Scholarship. Her research interests lie at the intersection of natural language processing and computational social science, and her work has been published in various top-tier conferences and journals including PNAS, EMNLP, ICWSM, and WWW. She has collaborated with the Washington Post and the Data for Black Lives organization to apply her research to address real-world problems.

Talk Title: Challenges in Opinion Manipulation Detection: An Examination of Wartime Russian Media

Talk Abstract: Information warfare has been at the forefront of the 2022 Russia-Ukraine war, as all sides attempt to shape online narratives. NLP could be valuable for combating manipulation, but most prior work focuses on detecting extreme manipulation strategies like fake news and relies on supervised approaches with pre-annotated data, which is unavailable in emerging crises. We release a new dataset, VoynaSlov, containing 38M+ social media posts from Russian media and their public responses. We characterize VoynaSlov along three dimensions: media ownership (state-affiliated, independent), platform (Twitter, VKontakte), and time (pre-war, during-war). Drawing from political communication, we investigate subtle manipulation strategies by applying state-of-the-art NLP models to quantify agenda-setting, framing, and priming. Our methodology includes word frequencies, topic modeling, supervised classification, and consideration of metadata such as likes or shares. Each technique reveals interesting, yet sometimes conflicting, insights; for example, independent outlets are more likely to use war-related words, but state-affiliated outlets discuss war-related topics more. Throughout the talk, we discuss numerous limitations: topic model interpretability and instability, unclear framing typologies and domain-specificity, and issues surrounding validity and misuse for priming. Our dataset, initial investigation, and discussion of open technical challenges can facilitate the identification and mitigation of information manipulation tactics in ongoing crisis situations.

Bio: Chao Ma is a Szego Assistant Professor in the Department of Mathematics at Stanford University. His research focuses on building mathematical theories for modern machine learning methods. His recent work sheds light on the implicit regularization effect of various optimization algorithms for neural networks by studying the interaction between the algorithms and the network structures. Before joining Stanford, Chao obtained his PhD from the Program in Applied and Computational Mathematics at Princeton University, advised by Prof. Weinan E. His thesis mathematically analyzed the approximation and generalization capacity of neural networks.

Talk Title: Implicit bias of optimization algorithms for neural networks and their effects on generalization

Talk Abstract: Modern neural networks are usually over-parameterized: the number of parameters exceeds the number of training data points. In this case the loss functions tend to have many (or even infinitely many) global minima, which imposes an additional challenge of minima selection on optimization algorithms, beyond convergence. Specifically, when training a neural network, the algorithm not only has to find a global minimum, but also needs to select minima with good generalization among many other bad ones. In this talk, I will share a series of works studying the mechanisms that facilitate global minima selection of optimization algorithms. First, with a linear stability theory, we show that stochastic gradient descent (SGD) favors flat and uniform global minima. Then, we build a theoretical connection between flatness and generalization performance based on a common structure of neural networks. Next, we study the global minima selection dynamics, i.e., the process by which an optimizer leaves bad minima for good ones, in two settings. For a manifold of minima around which the loss function grows quadratically, we derive effective exploration dynamics on the manifold for SGD and Adam, using a quasistatic approach. For a manifold of minima around which the loss function grows subquadratically, we study the behavior and effective dynamics for GD, which also explains the edge of stability phenomenon.

Bio: I am a philosopher of science and a computational researcher. My research agenda is broad and advances both our theoretical and empirical understanding of how emerging technologies (particularly AI) affect the way we do science and investigates how we can both study and use computational methods to better understand the joint processes of discovery and justification. I am currently a joint PhD Candidate in the departments of Philosophy and the Committee on the Conceptual and Historical Studies of Science at the University of Chicago. I am a Fellow in the Pritzker School of Molecular Engineering AI-Enabled Molecular Engineering of Materials and Systems for Sustainability program, an Affiliated Researcher at Globus Labs, and a member of the KnowledgeLab.

Talk Title: Digital Doubles of Everything (Even You)

Talk Abstract: Scientists have long made and interrogated doubles of reality. Often developed to describe narrow aspects of otherwise immense systems, traditional models have always been relatively poor substitutes for the bewildering tangle of complexity that pervades the world. However, the rise of AI allows scientists to generate and interrogate increasingly sophisticated digitized captures of entire systems of interest. These “digital doubles” represent everything from system I/O to complete characterizations of internal mechanisms and processes. In this project, we argue that the opportunity with doubles of many such systems is not just expedient exploration or accurate prediction. Instead, it is to combine them, represent interactions between them faithfully, and even enable them to interrogate and explore one another. We probe the possibility of developing digital doubles of dynamic systems of knowledge, values, and commitments, as well as using these doubles to operationalize and automate a logic of anomaly detection and correction, not only in our data but in our theoretical and normative, non-epistemic commitments.

Bio: Gemma Moran is a postdoctoral research scientist at the Data Science Institute at Columbia University, mentored by David Blei. She received her PhD in Statistics from the University of Pennsylvania, advised by Edward George and Veronika Rockova. Her research is on probabilistic machine learning and Bayesian inference. She aims to develop interpretable and reliable methods for large-scale data that help researchers gain scientific insight and guide their decision-making.

Talk Title: Identifiable deep generative models via sparse decoding

Talk Abstract: We develop the sparse VAE for unsupervised representation learning on high-dimensional data. The sparse VAE learns a set of latent factors (representations) which summarize the associations in the observed data features. The underlying model is sparse in that each observed feature (i.e. each dimension of the data) depends on a small subset of the latent factors. As examples, in ratings data each movie is only described by a few genres; in text data each word is only applicable to a few topics; in genomics, each gene is active in only a few biological processes. We prove such sparse deep generative models are identifiable: with infinite data, the true model parameters can be learned. (In contrast, most deep generative models are not identifiable.) We empirically study the sparse VAE with both simulated and real data. We find that it recovers meaningful latent factors and has smaller heldout reconstruction error than related methods.

Bio: Harlin Lee is a Hedrick Assistant Adjunct Professor at UCLA Mathematics. She received her PhD in Electrical and Computer Engineering at Carnegie Mellon University in 2021. She also has an MS in Machine Learning from Carnegie Mellon University, and a BS + MEng in Electrical Engineering and Computer Science from MIT. Her research is on learning from high-dimensional data supported on structures such as graphs (networks), low-dimensional subspaces, or sparsity, motivated by applications in healthcare and social science. Harlin’s lifelong vision is to use data theory to help everyone live physically, mentally and socially healthier.

Talk Title: Understanding scientific fields via network analysis and topic modeling

Talk Abstract: As scientific disciplines get larger and more complex, it becomes impossible for an individual researcher to be familiar with the entire body of literature. This forces them to specialize in a sub-field, and unfortunately such insulation can hinder the birth of ideas that arise from new connections, eventually slowing down scientific progress. As such, discovering fruitful interdisciplinary connections by analyzing scientific publications is an important problem in the science of science. This talk will present several past and ongoing projects that address this problem using tools from network analysis and topic modeling: 1) a dynamic-embedding-based method for link prediction in a machine learning/AI semantic network, 2) finding communities in cognitive science that study similar topics but do not cite each other or publish in the same venues, and 3) developing theoretically grounded hypergraph embedding methods to capture surprising collaborations or missed opportunities.

Bio: I am a fourth year PhD candidate in Physics at the University of Pennsylvania, advised by Dr. Masao Sako. I work with type Ia supernovae as cosmological probes as part of the Dark Energy Survey Collaboration as well as the Nancy Grace Roman Space Telescope supernova science investigation team. I am particularly interested in applications of machine learning and other modern data science techniques to large-scale astronomical survey data.

Talk Title: A Convolutional Neural Network Approach to Supernova Classification

Talk Abstract: Among the brightest objects in the universe, supernovae are powerful explosions marking the end of a star’s lifetime. Supernova type is defined by spectroscopic emission lines, but obtaining spectroscopy is often logistically infeasible. Thus, the ability to identify supernovae by type using time-series image data alone is crucial, especially in light of the increasing breadth and depth of upcoming telescopes. We present a convolutional neural network method for fast supernova time-series classification, with observed brightness data smoothed in both the wavelength and time directions with Gaussian process regression. We apply this method to full-duration and truncated supernova time-series, to simulate retrospective as well as real-time classification performance. Retrospective classification is used to differentiate cosmologically useful Type Ia supernovae from other supernova types, and this method achieves > 99% accuracy on this task. We are also able to differentiate between 6 supernova types with 60% accuracy given only two nights of data and 98% accuracy retrospectively.

Bio: Jian Kang is a final-year Ph.D. candidate in the Department of Computer Science at the University of Illinois at Urbana-Champaign. He received his M.CS. degree from the University of Virginia in 2016 and B.Eng. degree from Beijing University of Posts and Telecommunications in 2014. His research interests lie in trustworthy learning and mining on graphs. His research on related topics has been published at several major conferences and journals in data mining and artificial intelligence (e.g., KDD, WWW, CIKM, TKDE, TVCG). He is the recipient of the Mavis Future Faculty Fellowship and three reviewer awards (ICML’20, ICLR’21, CIKM’21).

Talk Title: Algorithmic Foundation of Fair Graph Mining

Talk Abstract: Graphs are ubiquitous in many real-world applications. To date, researchers have developed a plethora of theories, algorithms and systems that are successful in answering what and who questions on graphs. Despite the remarkable progress, unfairness often occurs in many graph mining algorithms, hindering their deployment in high-stakes applications. To make the graph mining process and its results fair, it is crucial to propose a paradigm shift, from answering what and who to understanding how and why. Four desired properties (i.e., utility, fairness, transparency and robustness) are called for in order to build an algorithmic foundation of fair graph mining. In this talk, I will present our efforts in building the algorithmic foundation of fair graph mining by addressing the tensions among the desired properties. First, we present a generic algorithmic framework to understand how the graph mining results relate to the input graph. Second, we systematically study individual fairness in graph mining, including the measurement, mitigation strategies and cost. Finally, we analyze the root cause of degree-related unfairness in graph neural networks and present a family of debiasing algorithms, followed by my thoughts on future work.

Bio: Jingyan Wang is a Ronald J. and Carol T. Beerman President’s postdoctoral fellow in the H. Milton Stewart School of Industrial and Systems Engineering at Georgia Institute of Technology. Her research interests lie in improving high-stakes decision-making problems such as hiring and admissions, using tools from statistics and machine learning. She is the recipient of the Best Student Paper Award at AAMAS 2019. She received her Ph.D. from the School of Computer Science at Carnegie Mellon University in 2021, advised by Nihar Shah. She received her B.S. in Electrical Engineering and Computer Sciences with a minor in Mathematics from the University of California, Berkeley in 2015.

Talk Title: Modeling and Correcting Bias in Sequential Evaluation

Talk Abstract: We consider the problem of sequential evaluation in applications such as hiring and competitions, in which an evaluator observes candidates in a sequence and assigns scores to these candidates in an online, irrevocable fashion. Motivated by the psychology literature that has studied sequential bias in such settings — namely, dependencies between the evaluation outcome and the order in which the candidates appear — we propose a natural model for the evaluator’s rating process that captures the lack of calibration inherent to such a task. We conduct crowdsourcing experiments to demonstrate various facets of our model. We then proceed to study how to correct sequential bias under our model by posing this as a statistical inference problem. We propose a near-linear time, online algorithm for this task and prove guarantees in terms of two canonical ranking metrics. We also prove that our algorithm is information theoretically optimal, by establishing matching lower bounds in both metrics. Finally, we show that our algorithm significantly outperforms the de facto method of naively using the rankings induced by the reported scores.

Bio: Jonatas Marques is a research assistant at the Federal University of Rio Grande do Sul (UFRGS, Brazil), working at the intersection of network management and artificial intelligence. He earned a PhD (2022, with honors) in Computer Science from UFRGS, advised by Prof. Luciano Paschoal Gaspary. Jonatas also spent a year (Sep 2019 – Aug 2020) as a research scholar at the University of Illinois at Urbana-Champaign working with Prof. Kirill Levchenko. His research helps advance network management in the programmable network era. He is the recipient of Best Paper Awards at IFIP/IEEE IM (2019) and SBC SBRC (2018).

Talk Title: Advancing Network Monitoring and Operation with In-band Telemetry and Data Plane Programmability

Talk Abstract: Modern communication networks operate under high expectations on performance and resilience (e.g., latency, bandwidth, availability), mainly due to the continuous proliferation of non-elastic, highly distributed applications. In this context, closely monitoring the state, behavior, and performance of networking devices and their traffic, as well as quickly troubleshooting problems as they arise, is essential for the operation of network infrastructures. In this talk, I will describe monitoring opportunities and challenges in the era of programmable data planes. I will present past and current work on advancing how we monitor networks and their traffic to improve network management practices. First, I will present IntSight, a system for highly accurate and fine-grained detection and diagnosis of SLO violations based on path-wise in-band telemetry. Second, I will present Euclid, a fully in-network, fine-grained, low-footprint, and low-delay traffic analysis mechanism for DDoS attack detection and mitigation. Finally, I will present ongoing work on exploring in-network computation for running machine learning models directly in the data plane of networks.

Bio: Laixi Shi is a Ph.D. candidate in the Department of Electrical and Computer Engineering at Carnegie Mellon University, fortunately advised by Prof. Yuejie Chi. She has also interned at Google Research Brain Team and Mitsubishi Electric Research Laboratories. Her research interests include reinforcement learning (RL), non-convex optimization, high-dimensional statistical estimation, and signal processing, ranging from theory to applications. Her current research focuses on 1) theoretical work: designing provably sample-efficient algorithms for value-based RL, offline RL, and robust RL problems, drawing on optimization and statistics; 2) practical work: deep reinforcement learning (DRL) algorithms for different large-scale problems such as Atari games and web navigation.

Talk Title: Provable Algorithms for Reinforcement Learning: Efficiency and Robustness

Talk Abstract: Reinforcement learning (RL) has recently achieved remarkable success in dealing with unknown environments and maximizing long-term cumulative rewards. Contemporary reinforcement learning has to deal with unknown environments with unprecedentedly large dimensionality. In order to fundamentally understand and improve the effectiveness of RL algorithms in high dimensions, a recent body of work has sought to develop a finite-sample theoretical framework to analyze the algorithms of interest. My work belongs to this recent line of work delineating the dependency of algorithm performance on salient parameters in a non-asymptotic fashion in different RL settings. Besides maximizing the expected total reward, perhaps an equally important goal (to say the least) for an RL agent is safety and robustness, especially in high-stakes applications such as robotics, autonomous driving, clinical trials, financial investments, and so on. Inspired by the robustness requirement, in this talk, we introduce our work on distributionally robust RL with Kullback-Leibler (KL) divergence as the distance, referring to the problem of learning an optimal policy that is robust to future environment perturbations. I will introduce our proposed robust RL algorithm which, to the best of our knowledge, is the first near-optimal algorithm for this problem: it matches the minimax lower bound up to a polynomial factor of the effective horizon length.

Bio: Linyi Li is a fifth-year Ph.D. student in the Computer Science Department of the University of Illinois Urbana-Champaign, advised by Prof. Bo Li and Prof. Tao Xie. Linyi’s research lies at the intersection of machine learning and computer security. Recently, he has focused on building certifiably trustworthy deep learning systems at scale, achieving state-of-the-art certifiable robustness against noise perturbations, semantic perturbations, poisoning attacks, and distributional shift, as well as state-of-the-art certifiable fairness. His research is published at top-tier deep learning and computer security conferences, including ICML, NeurIPS, ICLR, S&P, and CCS. Linyi is a recipient of the AdvML Rising Star Award and the Wing Kai Cheng Fellowship, and a finalist for the Two Sigma PhD Fellowship and the Qualcomm Innovation Fellowship. Previously, Linyi received his bachelor’s degree in computer science with distinction from Tsinghua University in 2018.

Talk Title: Enabling Large-Scale Certifiable Deep Learning towards Trustworthy Machine Learning

Talk Abstract: Given the rising societal safety and ethical concerns about modern deep learning systems in deployment, designing certifiable large-scale deep learning systems for real-world requirements is in urgent demand. This talk will introduce our series of work on building certifiable large-scale deep learning systems towards trustworthy machine learning, achieving robustness against noise perturbations, semantic transformations, poisoning attacks, and distributional shifts; fairness; and reliability against numerical defects. I will also present the applications of these certifiable methods in modern deep reinforcement learning and computer vision systems. These works were recently published at machine learning and security conferences such as ICML, ICLR, NeurIPS, CCS, and S&P. Then, I will introduce the shared core backbone for designing certifiable deep learning systems, including threat-model-dependent smoothing, efficient and exact model state abstraction, statistical worst-case characterization, and diversity-enhanced model training. These backbone methodologies not only enable us to achieve state-of-the-art certified trustworthiness under multiple existing notions and outperform existing baselines (where they exist) by a large margin, but also indicate a promising direction towards achieving certified and “meta-trustworthy” ML. At the end of the talk, I will summarize several challenges in certifiable ML, such as scalability challenges, tightness challenges, deployment challenges, the gap between theory and practice, and the societal implications/impacts of certifiably trustworthy ML. The talk will conclude with highlighted future directions for research and applications.

Bio: Lu Gan is currently a Postdoctoral Scholar at the California Institute of Technology, working with Soon-Jo Chung and Yisong Yue. She completed her Ph.D. in robotics at the University of Michigan, where she was co-advised by Ryan Eustice, Jessy Grizzle, and Maani Ghaffari. She is broadly interested in Robotics, Computer Vision, and Machine Learning. Her current research focuses on perception and navigation for robot autonomy in unstructured environments and adverse conditions. Her work has been published at premier conferences and journals in robotics such as ICRA, IROS, BMVC, IEEE RAL and IEEE TRO. More details can be found at https://ganlumomo.github.io/.

Talk Title: Semantic-Aware Robotic Mapping in Unknown, Loosely Structured Environments

Talk Abstract: Robotic mapping is the problem of inferring a representation of a robot’s surroundings using noisy measurements as it navigates through an environment. As robotic systems move toward more challenging behaviors in more complex scenarios, such systems require richer maps so that the robot understands the significance of the scene and the objects within it. This talk focuses on semantic-aware robotic mapping in unknown, loosely structured environments. The first part is a Bayesian kernel inference semantic mapping framework that formulates a unified probabilistic model for occupancy and semantics, and provides a closed-form solution for scalable dense semantic mapping. This framework significantly reduces the computational complexity of learning-based continuous semantic mapping while still achieving high accuracy. Next, I will present a novel and flexible multi-task multi-layer Bayesian mapping framework that provides even richer environmental information. A two-layer robotic map of semantics and traversability is built as a constructive example. Moreover, it is readily extendable to include more layers as needed. Both mapping algorithms were verified using publicly available datasets or through experimental results on a Cassie-series bipedal robot. Finally, instead of modeling the terrain traversability using metrics defined by domain knowledge, an energy-based deep inverse reinforcement learning method that learns robot-specific traversability from demonstrations will be presented. This method considers robot proprioception and can learn reward maps that lead to more energy-efficient future trajectories. Experiments are conducted using a dataset collected by a Mini-Cheetah robot in various scenes of a campus environment.

Bio: Nwamaka Okafor is a final-year PhD student at University College Dublin. She works with the Smartbog Observation Group on Irish peatlands and is advised by Dr. Declan Delaney. Her work is supported by the Schlumberger Faculty for the Future Fellowship (FFTF) Programme, the Environmental Protection Agency (EPA), and TETFUND, and focuses on the applications of IoT and machine learning in environmental monitoring. Key to her research is a means to process and efficiently harness inherently noisy data collected from IoT deployments, web/crowdsourced data, and remote sensing of ecologically significant sites. Nwamaka holds an MSc in Computer Forensics and Cyber Security (Distinction) from the University of Greenwich, London. During the Covid-19 pandemic, Nwamaka collaborated with other researchers from the Universities of Oxford and Cambridge on a British Telecom-sponsored project to explore the impact of containment measures on the rate of spread of Covid-19 in selected countries. The project won the overall best project with Real World Impact Award among 44 other projects from across the world. Nwamaka is a recipient of the 2020 Google Women Techmakers award (now Generation Google Scholarship) and the ACM SRC award, and was recently listed as one of the eighty women advancing AI in Africa and around the world by African Shapers. Her work has been published in leading journals and conferences, including ICML and IEEE-Sensors. Upon completion of her PhD, Nwamaka will join Argonne National Laboratory, IL, USA as a postdoctoral appointee and will focus on developing novel methods to analyse logs from supercomputers, including Aurora and Polaris.

Talk Title: Efficient data processing pipeline for IoT sensor devices in environmental monitoring networks

Talk Abstract: Low-cost IoT sensors (LCS) have the capacity to provide high-resolution spatio-temporal datasets of key variables in environmental monitoring networks. The use of LCS in environmental monitoring, however, continues to raise important questions, especially pertaining to the reliability, field performance, and data quality of the sensors. IoT technologies are at an early stage of development in many application areas, and as such they are still challenged by a number of issues, including data handling and frameworks. There is currently no universal framework or formalized architecture for the application of IoT sensor devices in environmental monitoring, and no complete tool has been developed for data handling and analysis.

Developing an effective data processing pipeline for IoT sensor data is essential for the adoption and application of LCS in environmental monitoring. In this talk, I will discuss our research efforts aimed at improving the field performance and data quality of LCS devices in environmental monitoring networks through the development of reliable data processing and error correction techniques such as automatic sensor calibration, data fusion, data imputation, outlier detection, and effective feature selection.

I will discuss how the capabilities of AI/ML can be leveraged to support the effective application of IoT in ecological sensing. In particular, I will describe how these technologies can (1) support the operations of LCS in data acquisition models to capture large-scale and longitudinal observations, (2) support the development of specific data handling and Data Quality Improvement (DQI) techniques for LCS, and (3) serve as effective strategies to support on-site sensor calibration.

Bio: Oscar Leong is a von Karman Instructor in the Computing and Mathematical Sciences department at Caltech, hosted by Venkat Chandrasekaran. He also works with Katie Bouman and the Computational Cameras group. He received his PhD in 2021 in Computational and Applied Mathematics from Rice University under the supervision of Paul Hand, where he was an NSF Graduate Fellow. His research interests lie in the mathematics of data science, optimization, inverse problems, and machine learning. The core focus of his doctoral work was on proving recovery theorems for ill-posed inverse problems using generative models. He is broadly interested in using tools from convex geometry, high-dimensional statistics, and nonlinear optimization to better understand and improve data-driven, decision-making algorithms.

Talk Title: The power and limitations of convex regularization

Talk Abstract: The incorporation of regularization functionals to promote structure in inverse problems has a long history in applied mathematics, with a recent surge of interest in the use of both convex and nonconvex functionals. The structure of such functionals is largely driven by computational and modeling considerations, but there is no overarching understanding of the power and limitations of enforcing convexity versus nonconvexity, or of when one should be used over the other for a given data distribution. In this presentation, we propose to tackle this question from a convex geometric perspective and ask the following: for a given data source, what is the optimal regularizer induced by a convex body? To answer this, a variational optimization problem over the space of convex bodies is proposed to search for the optimal regularizer. We analyze the structure of population risk minimizers by connecting minimization of the population objective to Minkowski’s inequality for convex bodies. These results are also shown to be robust, as we establish convergence of empirical risk minimizers to the population risk minimizer in the limit of infinite data. Based on this characterization of optimal convex regularizers, we then consider whether convexity is the right structure for certain distributions and use our theory to characterize when convex or nonconvex structure should be preferred.

Bio: Ruishan Liu is a postdoctoral researcher in the Department of Biomedical Data Science at Stanford University, working with Prof. James Zou. She received her PhD from the Department of Electrical Engineering at Stanford University in 2022. She is broadly interested in the intersection of machine learning and applications in human diseases, health, and genomics. The results of her work have been published in top-tier venues such as Nature, Nature Medicine, and ICLR. She received the Stanford Graduate Fellowship and was selected as a Rising Star in Engineering in Health by Johns Hopkins University and Columbia University in 2022. She led the project Trial Pathfinder, which was selected as a 2021 Top Ten Clinical Research Achievement and as a finalist for the Global Pharma Award 2021.

Talk Title: AI for clinical trials and precision medicine

Talk Abstract: Clinical trials are the gatekeepers of medicine but can be very costly and lengthy to conduct. Precision medicine transforms healthcare but is limited by available clinical knowledge. This talk explores how AI can help with both: making clinical trials more efficient and generating hypotheses for precision medicine. I will first discuss Trial Pathfinder, a computational framework that simulates synthetic patient cohorts from medical records to optimize cancer trial designs (Liu et al. Nature 2021). Trial Pathfinder enables inclusive criteria and data valuation for clinical trials, benefiting diverse patients and trial sponsors. In the second part, I will discuss how to quantify the effectiveness of cancer therapies in patients with specific mutations (Liu et al. Nature Medicine 2022). This work demonstrates how computational analysis of large real-world data generates insights, hypotheses, and resources to enable precision oncology.

Bio: Ryan Shi is a final-year Ph.D. candidate in Societal Computing in the School of Computer Science at Carnegie Mellon University. He works with nonprofit organizations to address societal challenges in food security, sustainability, and public health using AI. His research has been deployed at these organizations worldwide. Shi studies game theory, online learning, and reinforcement learning problems motivated by nonprofit applications. He is the recipient of a Siebel Scholar award, an IEEE Computer Society Upsilon Pi Epsilon Scholarship, and a Carnegie Mellon Presidential Fellowship. Shi grew up in Henan, China before moving to the U.S., where he graduated from Swarthmore College with a B.A. in mathematics and computer science.

Talk Title: Towards the Science of AI for Nonprofits

Talk Abstract: I work on AI for nonprofits, with nonprofits, so that it can be used by nonprofits. This talk is about delineating AI for nonprofits as a research discipline of its own. I will start with a 4-year collaboration with a food rescue organization. We developed a series of machine learning-based algorithms to help the organization engage with food rescue volunteers. Our tools have been deployed since February 2020. Our randomized controlled trial showed that the algorithm not only improved the accuracy of engagement, but also made it significantly easier for rescues to get claimed. We are rolling it out to over 15 cities across the US. Motivated by the pain points we experienced in this and other works, we proposed bandit data-driven optimization, a new learning paradigm that integrates online bandit learning and offline predictive models to address the unique challenges that arise in data science projects for nonprofits. We prove theoretical guarantees for our algorithm and show that it achieves superior performance on simulated and real datasets. I will conclude the talk with an inquiry into the state of AI for nonprofits as its own research discipline. Using another project, in which we developed and deployed an NLP tool with a conservation nonprofit, as a primer, I argue that AI for nonprofits needs its own set of research questions that will systematically guide future projects. I will share a few of these directions that I am currently working on and plan to work on in the near future.

Bio: Tian Li is a fifth-year Ph.D. student in the Computer Science Department at Carnegie Mellon University working with Virginia Smith. Her research interests are in distributed optimization, large-scale machine learning, federated learning, and data-intensive systems. Prior to CMU, she received her undergraduate degrees in Computer Science and Economics from Peking University. She was a research intern at Google Research in 2022. She received the Best Paper Award at the ICLR Workshop on Security and Safety in Machine Learning Systems (2021), was selected as a Rising Star in Machine Learning (2021), and was invited to participate in the EECS Rising Stars Workshop (2022).

Talk Title: Scalable and Trustworthy Learning in Heterogeneous Networks

Talk Abstract: Federated learning stands to power next-generation machine learning applications by aggregating a wealth of knowledge from distributed data sources. However, federated networks introduce a number of challenges beyond traditional distributed learning scenarios. In addition to being accurate, federated methods must scale to potentially massive and heterogeneous networks of devices, and must exhibit trustworthy behavior, addressing pragmatic concerns related to issues such as fairness, robustness, and user privacy. In this talk, I discuss how heterogeneity lies at the center of the constraints of federated learning, not only affecting the accuracy of the models, but also competing with other critical metrics such as fairness, robustness, and privacy. To address these metrics, I present new, scalable federated learning objectives and algorithms that rigorously account for and address sources of heterogeneity. Although our work is grounded in the application of federated learning, I show that many of the techniques and fundamental tradeoffs extend well beyond this use case.

Bio: Valentin is a final-year PhD student at the University of Oxford and a research assistant at LMU Munich. His work broadly focuses on the intersection of NLP, linguistics, and computational social science, with specific interests in tokenization algorithms, socially and temporally aware NLP systems, and computational models of political ideology. The findings of his research have been published at major NLP and machine learning conferences such as ACL, EMNLP, NAACL, ICWSM, and ICML. During his PhD, Valentin also spent time as a research intern in DeepMind’s Language Team and as a visiting scholar in Stanford’s NLP Group.

Talk Title: Modeling Ideological Language with Graph Neural Networks and Structured Sparsity

Talk Abstract: The increasing polarization of online political discourse calls for computational tools that automatically detect and monitor ideological divides in social media. While many methods to track ideological polarization have been proposed, most of them rely on knowing in advance the political orientation of text, a requirement seldom met in practice. In this talk, I will discuss research recently published at NAACL and ICML that fully dispenses with the need for labeled data and instead leverages the ubiquitous network structure of online discussion forums, specifically Reddit, to detect ideological polarization. I will first give an overview of prior research on polarization in natural language processing, highlighting salience and framing as two key mechanisms by which ideology manifests itself in language. I will then present Slap4slip, a method that combines graph neural networks with structured sparsity learning to determine the polarization of political issues (e.g., abortion) along the dimensions of salience and framing. In the third part of the talk, I will show that polarization is also reflected by the existence of an ideological subspace in contextualized embeddings, which can be found by adding orthogonality regularization to Slap4slip. The ideological subspace encodes abstract evaluative semantics and indicates pronounced changes in the political left-right spectrum during the presidency of Donald Trump.

Bio: Vishwali Mhasawade is a Ph.D. candidate in Computer Science at New York University, advised by Prof. Rumi Chunara. Her research is supported by the Google Fellowship in Health. She focuses on designing fair and equitable machine learning systems for mitigating health disparities and developing methods in causal inference and algorithmic fairness. Vishwali was an intern at Fiddler AI Labs and Spotify Research. She has been involved in mentoring roles involving high school students through the NYU ARISE program, reviewer mentoring through the Machine Learning for Health initiative, and career mentoring for Ph.D. applicants through the Women in Machine Learning program.

Talk Title: Advancing Health Equity with Machine Learning

Talk Abstract: While a patient visits the hospital for treatment, factors outside the hospital, such as where the individual resides and what educational and vocational opportunities are present, play a vital role in the patient’s health trajectory. Yet most advances in machine learning in healthcare are restricted to data from within hospitals and clinics. While health equity, defined as minimizing avoidable disparities in health and its determinants between groups of people with different social privileges in terms of power, wealth, and prestige, is the primary principle underlying public health research, it has been largely ignored by current machine learning systems. Inequality at the social level is harmful to the population as a whole. Thus, a focus on the factors related to health outside the hospital is imperative, not only to address specific challenges for high-risk individuals but also to determine what policies will benefit the community as a whole. In this talk, I will first demonstrate the challenges of mitigating health disparities resulting from the different representations of demographic groups based on attributes like gender and self-reported race. I will focus on machine learning systems using person-generated data and in-hospital data from multiple geographical locations worldwide. Next, I will present a causal remedial approach to health inequity using algorithmic fairness that reduces health disparities. Finally, I will discuss how algorithmic fairness can be leveraged to achieve health equity by incorporating social factors and illustrate how residual disparities persist if social factors are ignored.

Bio: I am a joint NIST-IMA Postdoctoral Fellow in Analysis of Machine Learning at the Institute for Mathematics and its Applications (IMA) at the University of Minnesota (UMN). I completed my Ph.D. in mathematics at the University of California, Los Angeles in 2022 under the guidance of Professor Stan Osher. During my Ph.D., I developed optimal transport-based algorithms to solve nonlinear partial differential equations (PDEs) arising in Darcy’s law, tumor growth models, and mean field games. As a postdoc, I will be working with Professors Jeff Calder, Gilad Lerman, and Li Wang at UMN to develop PDE-based algorithms to solve high-dimensional machine learning problems and analyze the theoretical properties of the algorithms.

Talk Title: The Back-And-Forth Method For Wasserstein Gradient Flows

Talk Abstract: We present a method to efficiently compute Wasserstein gradient flows. Our approach is based on a generalization of the back-and-forth method (BFM) introduced by Jacobs and Leger to solve optimal transport problems. We evolve the gradient flow by solving the dual problem to the JKO scheme. In general, the dual problem is much better behaved than the primal problem. This allows us to efficiently run large-scale gradient flow simulations for a large class of internal energies, including singular and non-convex energies. Joint work with Matt Jacobs (Purdue University) and Flavien Leger (INRIA Paris).
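For readers unfamiliar with the JKO scheme mentioned above, its standard primal form can be written as follows. The notation is chosen here for illustration and is not taken from the talk: $\rho^{(k)}$ is the density at step $k$, $\tau > 0$ is the time step, $E$ is the energy functional driving the flow, and $W_2$ is the 2-Wasserstein distance.

$$
\rho^{(k+1)} \in \operatorname*{arg\,min}_{\rho} \; E(\rho) + \frac{1}{2\tau} W_2^2\big(\rho, \rho^{(k)}\big)
$$

Each step balances decreasing the energy against staying close, in Wasserstein distance, to the previous iterate. The abstract's method works with the dual of this minimization problem, which is not written out here.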

Bio: Xintong Wang is a postdoctoral fellow in the Harvard EconCS group hosted by David Parkes. She received her Ph.D. in April 2021 from the CSE Department at the University of Michigan, advised by Michael Wellman. Her research is centered around developing computational approaches to model complex agent behaviors and to design better market-based algorithmic systems, drawing on tools from machine learning, game theory, and optimization, with applications ranging from platform economies to financial systems. During her Ph.D., she worked as a research intern at Microsoft Research NYC (mentored by David Pennock) and J.P. Morgan AI Research (mentored by Tucker Balch). Prior to Michigan, she received her Bachelor’s degree from Washington University in St. Louis in 2015.

Talk Title: Market Manipulation: An Adversarial Learning Framework for Detection and Evasion

Talk Abstract: I will talk about our recently proposed adversarial learning framework that captures the evolving game between a regulator who develops tools to detect market manipulation and a manipulator who obfuscates actions to evade detection. The model includes three main parts: (1) a generator that learns to adapt a sequence of known manipulation activities to resemble patterns of a normal (benign) trading agent while preserving the manipulation intent; (2) a discriminator that differentiates the adversarially adapted manipulation actions from normal trading activities; and (3) an agent-based model that provides feedback in regard to the manipulation effectiveness and profitability of adapted outputs. We conduct experiments on simulated trading actions associated with a manipulator and a benign agent respectively. We show examples of adapted manipulation order streams that mimic the specified benign trading patterns and appear qualitatively different from the original manipulation strategy we encoded in the simulator. These results demonstrate the possibility of automatically generating a diverse set of (unseen) manipulation strategies that can facilitate the training of more robust detection algorithms.

Bio: I am a postdoc hosted by the Department of Statistics at the University of Chicago. Prior to this, I obtained my Ph.D. in Statistics at the University of Washington, advised by Fang Han and Daniela Witten. I am broadly interested in nonparametric and semiparametric statistics, high dimensional inference, and applied probability.

Talk Title: Universality of regularized regression estimators in high dimensions

Talk Abstract: The Convex Gaussian Min-Max Theorem (CGMT) has emerged as a prominent theoretical tool for analyzing the precise stochastic behavior of various statistical estimators in the so-called high dimensional proportional regime, where the sample size and the signal dimension are of the same order. However, a well recognized limitation of the existing CGMT machinery lies in its stringent requirement of exact Gaussianity of the design matrix, which renders the obtained precise high dimensional asymptotics largely a specific Gaussian theory in various important statistical models. This work provides a structural universality framework for a broad class of regularized regression estimators that is particularly compatible with the CGMT machinery. In particular, we show that with a good enough $\ell_\infty$ bound for the regression estimator $\hat{\mu}_A$, any ‘structural property’ that can be detected via the CGMT for $\hat{\mu}_G$ (under a standard Gaussian design $G$) also holds for $\hat{\mu}_A$ under a general design $A$ with independent entries. As a proof of concept, we demonstrate our new universality framework in three key examples of regularized regression estimators: the Ridge, Lasso and regularized robust regression estimators, where new universality properties of risk asymptotics and/or distributions of regression estimators and other related quantities are proved. As a major statistical implication of the Lasso universality results, we validate inference procedures using the degrees-of-freedom adjusted debiased Lasso under general design and error distributions. We also provide a counterexample, showing that universality properties for regularized regression estimators do not extend to general isotropic designs.

Bio: Denny Wu is a Ph.D. candidate at the University of Toronto and the Vector Institute for Artificial Intelligence, under the supervision of Jimmy Ba and Murat A. Erdogdu. He also maintains close collaboration with the University of Tokyo and RIKEN AIP, where he is currently working with Taiji Suzuki and Atsushi Nitanda. Denny is interested in developing a theoretical understanding (e.g., optimization and generalization) of modern machine learning systems, especially overparameterized models such as neural networks, using tools from high-dimensional statistics.

Talk Title: How neural network learns representation: a random matrix theory perspective

Talk Abstract: Random matrix theory (RMT) provides powerful tools to characterize the performance of random neural networks in high dimensions. However, it is not clear whether similar tools can be applied to trained neural networks, where the parameters are no longer i.i.d. due to gradient-based learning. In this work we use RMT to precisely quantify the benefit of feature learning in the “early phase” of gradient descent training. We study the first gradient step under the MSE loss on the first-layer weights $W$ in a two-layer neural network. In the proportional asymptotic limit and an idealized student-teacher setting, we show that the gradient update contains a rank-1 “spike”, which results in an alignment between the weights and the linear component of the teacher model $f^*$. To understand the impact of this alignment, we compute the asymptotic prediction risk of ridge regression on the conjugate kernel after one feature learning step with learning rate $\eta$. We consider two scalings of $\eta$. For small $\eta$, we establish a Gaussian equivalence property for the trained features, and prove that the learned kernel improves upon the initial random features, but cannot defeat the best linear model. For sufficiently large $\eta$, in contrast, we prove that for certain $f^*$, the same ridge estimator goes beyond this “linear regime” and outperforms a wide range of (fixed) kernels. Our results demonstrate that even one gradient step can lead to a considerable advantage over random features, and highlight the role of learning rate scaling in the initial phase of training.

Bio: Yiping Lu is a fourth-year Ph.D. student at ICME, Stanford, working with Lexing Ying and Jose Blanchet. Before that, he received his Bachelor’s degree in computational mathematics and information science from Peking University. His research interests lie in Non-parametric Statistics, Inverse Problems, Stochastic Modeling, and Econometrics. His research aims to design algorithms that combine data-driven techniques with (differential equation/structural) modeling and provide corresponding statistical and computational guarantees. Yiping’s research is supported by the Stanford Interdisciplinary Graduate Fellowship.

Talk Title: Machine Learning for Differential equations: Statistics, Optimization and MultiLevel Learning

Talk Abstract: In this talk, we aim to study the statistical limits and optimal algorithms for solving elliptic PDEs from random samples. In the first part of my talk, I’ll study the statistical and optimization properties of learning the elliptic inverse problem using the Deep Ritz Method (DRM) and Physics Informed Neural Networks (PINNs). We provided a minimax lower bound for this problem and discovered that the variance in DRM leads to suboptimal sample complexity. Based on this observation, we proposed a modification of DRM with optimal sample complexity. Then I’ll introduce the optimization properties of a class of Sobolev norms used as the objective function. Although early stopped gradient descent is statistically optimal for all of these objective functions, I observed that using the Sobolev norm can accelerate training. Combining the information from the two papers, we can conclude that DRM is fast for high-dimensional smooth functions due to the low computational cost of each iteration, but for low-dimensional rough functions, we should use PINN. In the second part of my talk, I’ll talk about the statistical limit of linear operator learning, where both the input and output spaces are infinite-dimensional. We showed that a proper bias-variance trade-off could lead to optimal learning rates. The optimal learning rate is different from that of learning finite-dimensional linear operators. We also illustrate how this theory can inspire a multilevel machine learning algorithm. Our theory has wide application in learning differential operators and generative modeling.

Bio: Yiqun Chen is a Stanford Data Science Postdoctoral Fellow hosted by Professor James Zou. His research focuses on quantifying, calibrating, and communicating the uncertainty in modern data analysis, with applications to biomedical and health data. Previously, Yiqun received his Ph.D. in Biostatistics from the University of Washington under the supervision of Professor Daniela Witten. He completed undergraduate degrees in Statistics, Computer Science, and Chemical Biology at the University of California, Berkeley. Yiqun is the recipient of a young investigator award at CROI 2020, a best paper award at WNAR 2021, a student research award at NESS 2022, a University of Washington Outstanding Teaching Assistant Award, and the Thomas R. Fleming Excellence in Biostatistics Award.

Talk Title: Selective inference for k-means clustering

Talk Abstract: We consider the problem of testing for a difference in means between clusters of observations identified via k-means clustering. In this setting, classical hypothesis tests lead to an inflated Type I error rate. To overcome this problem, we take a selective inference approach. We propose a finite-sample p-value that controls the selective Type I error for a test of the difference in means between a pair of clusters obtained using k-means clustering, and show that it can be efficiently computed. We apply our proposal in simulation, and on hand-written digits data and single-cell RNA-sequencing data.
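The inflated Type I error described in this abstract can be seen in a small simulation. The sketch below is only an illustration of the problem, not the selective p-value proposed in the talk: it draws one-dimensional data from a single standard normal distribution (so there are no true clusters), splits it with a simple 2-means routine, and then applies a naive two-sample z-test to the resulting clusters. All function names, the sample size, and the known-variance z-test are assumptions made for this example.

```python
import math
import random


def two_means_1d(xs, iters=50):
    """Lloyd's algorithm for k=2 in one dimension, initialised at min and max."""
    c0, c1 = min(xs), max(xs)
    left, right = [], []
    for _ in range(iters):
        # Assign each point to its nearest centre (ties go to the left cluster).
        left = [x for x in xs if abs(x - c0) <= abs(x - c1)]
        right = [x for x in xs if abs(x - c0) > abs(x - c1)]
        new_c0 = sum(left) / len(left)
        new_c1 = sum(right) / len(right)
        if (new_c0, new_c1) == (c0, c1):
            break
        c0, c1 = new_c0, new_c1
    return left, right


def naive_p_value(left, right):
    """Two-sided z-test for equal means, assuming known unit variance.

    This ignores the fact that the clusters were chosen using the same data.
    """
    diff = sum(right) / len(right) - sum(left) / len(left)
    se = math.sqrt(1.0 / len(left) + 1.0 / len(right))
    return math.erfc(abs(diff / se) / math.sqrt(2.0))


def rejection_rate(trials=200, n=30, alpha=0.05, seed=0):
    """Fraction of null datasets on which the naive test rejects at level alpha."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        xs = [rng.gauss(0.0, 1.0) for _ in range(n)]  # no real clusters
        left, right = two_means_1d(xs)
        if naive_p_value(left, right) < alpha:
            rejections += 1
    return rejections / trials


if __name__ == "__main__":
    print("naive rejection rate under the null:", rejection_rate())
```

A valid test at level 0.05 would reject on roughly 5% of these null datasets. The naive test rejects far more often, because the clusters are constructed to be well separated before the test is run.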

Bio: Yuntian Deng is a PhD student at Harvard University advised by Prof. Alexander Rush and Prof. Stuart Shieber. Yuntian’s research aims at building coherent, transparent, and efficient long-form text generation models. Yuntian is also the main contributor to open-source projects such as OpenNMT, Im2LaTeX, and LaTeX2Im that are widely used in academia and industry. For his research, teaching, and professional service, he has received the Nvidia Fellowship, the Baidu Fellowship, the ACL 2017 Best Demo Paper Runner-Up award, the DAC 2020 Best Paper award, the Harvard Certificate of Distinction in Teaching, and recognition as a NeurIPS 2020 top 10% reviewer.

Talk Title: Model Criticism for Long-Form Text Generation

Talk Abstract: Language models have demonstrated the ability to generate highly fluent text; however, it remains unclear whether their output retains coherent high-level structure (e.g., story progression). Here, we propose to apply a statistical tool, model criticism in latent space, to evaluate the high-level structure of the generated text. Model criticism compares the distributions of real and generated data in a latent space obtained according to an assumed generative process. Different generative processes identify specific failure modes of the underlying model. We perform experiments on three representative aspects of high-level discourse (coherence, coreference, and topicality) and find that transformer-based language models are able to capture topical structures but have a harder time maintaining structural coherence or modeling coreference structures.

Bio: Zhimei Ren is a postdoctoral researcher in the Statistics Department at the University of Chicago, advised by Professor Rina Foygel Barber. Before joining the University of Chicago, she obtained her Ph.D. in Statistics from Stanford University, advised by Professor Emmanuel Candès.

Talk Title: Derandomized knockoffs: leveraging e-values for false discovery rate control

Talk Abstract: Model-X knockoffs is a flexible wrapper method for high-dimensional regression algorithms, which provides guaranteed control of the false discovery rate (FDR). Due to the randomness inherent to the method, different runs of model-X knockoffs on the same dataset often result in different sets of selected variables, which is undesirable in practice. In this paper, we introduce a methodology for derandomizing model-X knockoffs with provable FDR control. The key insight of our proposed method lies in the discovery that the knockoffs procedure is in essence an e-BH procedure. We make use of this connection, and derandomize model-X knockoffs by aggregating the e-values resulting from multiple knockoff realizations. We prove that the derandomized procedure controls the FDR at the desired level, without any additional conditions (in contrast, previously proposed methods for derandomization are not able to guarantee FDR control). The proposed method is evaluated with numerical experiments, where we find that the derandomized procedure achieves comparable power and dramatically decreased selection variability when compared with model-X knockoffs.
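
The aggregation step described in the abstract above can be sketched with the standard e-BH rule: sort the e-values in decreasing order, find the largest k such that k times the k-th largest e-value is at least m/alpha, and select the k hypotheses with the largest e-values. The sketch below is a minimal illustration, not the speaker's implementation. It assumes the per-run e-values are already available, aggregates them by a plain average (the abstract says only "aggregating", so the averaging rule is an assumption), and uses made-up numbers; `e_bh` and `average_evalues` are hypothetical helper names.

```python
def average_evalues(runs):
    """Average e-values feature by feature across runs (an average of e-values is an e-value)."""
    n_runs = len(runs)
    return [sum(column) / n_runs for column in zip(*runs)]


def e_bh(evalues, alpha):
    """e-BH: select the k* largest e-values, where k* = max{k : k * e_(k) >= m / alpha}."""
    m = len(evalues)
    order = sorted(range(m), key=lambda j: evalues[j], reverse=True)
    k_star = 0
    for k, j in enumerate(order, start=1):
        if k * evalues[j] >= m / alpha:
            k_star = k
    return sorted(order[:k_star])


# Illustrative (made-up) e-values for 5 features from 3 hypothetical knockoff runs.
runs = [
    [30.0, 30.0, 0.0, 0.0, 0.0],
    [30.0, 0.0, 6.0, 0.0, 0.0],
    [30.0, 15.0, 0.0, 0.0, 0.0],
]
alpha = 0.2

per_run = [e_bh(run, alpha) for run in runs]       # selections can differ run to run
stable = e_bh(average_evalues(runs), alpha)        # one selection from the averaged e-values
```

In this toy input the second run alone selects a different set from the other two, while the averaged e-values yield a single selection that no longer depends on which run happened to be used.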

Bio: Zichao (Jack) Wang is a final-year Ph.D. student in electrical and computer engineering at Rice University, advised by Prof. Richard Baraniuk. Previously, he obtained his M.S. (2020) and B.S. (2016) degrees, both in electrical and computer engineering, from Rice University. His research focuses on developing artificial intelligence, machine learning, and natural language processing methods with applications in education and human learning. He has been a research intern with Microsoft Research, NVIDIA Research, and Google AI.

Talk Title: Machine learning for human learning

Talk Abstract: Despite the recent advances in artificial intelligence and machine learning, we have yet to witness the transformative breakthroughs that they can bring to education, and more broadly, to how humans learn. In this talk, I will introduce our two recent research directions in developing AI/ML methods to enable more personalized learning experiences on a large scale. First, I will describe generative modeling and representation learning techniques to enable adaptive learning materials, such as assessment questions and scientific equations, that are personalized to each learner. Second, I will describe a framework for analyzing learners’ open-ended solutions to assessment questions such as code submissions in computer science education. I will also discuss my vision for AI/ML methods for education and human learning, highlighting the fundamental technical challenges, my preliminary attempts, and their potential real-world impacts.