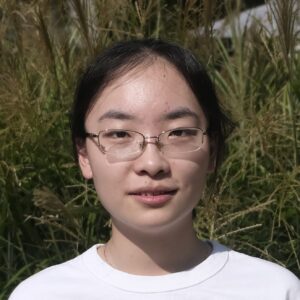

Yuqing Wang

Bio: Yuqing Wang is a Ph.D. candidate in Mathematics at the Georgia Institute of Technology, advised by Prof. Molei Tao. Her research lies at the intersection of machine learning, optimization, sampling, (stochastic) dynamics, and computational mathematics. She is interested in quantitatively understanding and improving the dynamics in machine learning, including the implicit bias of large learning rates and the acceleration of sampling dynamics. Before coming to Georgia Tech, Yuqing Wang received her B.S. in Computational Mathematics from Nankai University.

Talk Title: What creates edge of stability, balancing, and catapult

Abstract: When a large learning rate is used in gradient descent (GD) for nonconvex optimization, various phenomena that classical optimization theory cannot explain can arise, including edge of stability, balancing, and catapult. Many theoretical works have tried to analyze these phenomena, but a high-level picture is still missing: it is unclear when and why they occur. In this talk, I will show that these phenomena are different tips of the same iceberg. They occur when the objective function has good regularity. This regularity, together with the tendency of a large learning rate to guide gradient descent from sharp regions to flatter ones, controls the largest eigenvalue of the Hessian, i.e., the sharpness, along the GD trajectory, which in turn produces the various phenomena. The result is based on a nontrivial convergence analysis under large learning rates for a family of nonconvex functions of various regularities that do not have Lipschitz gradients, a property usually assumed by default in nonconvex optimization. It also contains the first non-asymptotic result on the rate of convergence in this setting. Neural network experiments will be presented to validate the result.