UChicago AI Summit Examines Promise and Concerns for Science and Society

Artificial intelligence (AI) may soon become the kind of generational technology that impacts all areas of society. In these early stages, it’s critical for the conversation to include a broad range of experts – not just the computer scientists creating AI approaches, but social scientists, artists, policy experts, historians, and philosophers – to discuss both its potential and risks.

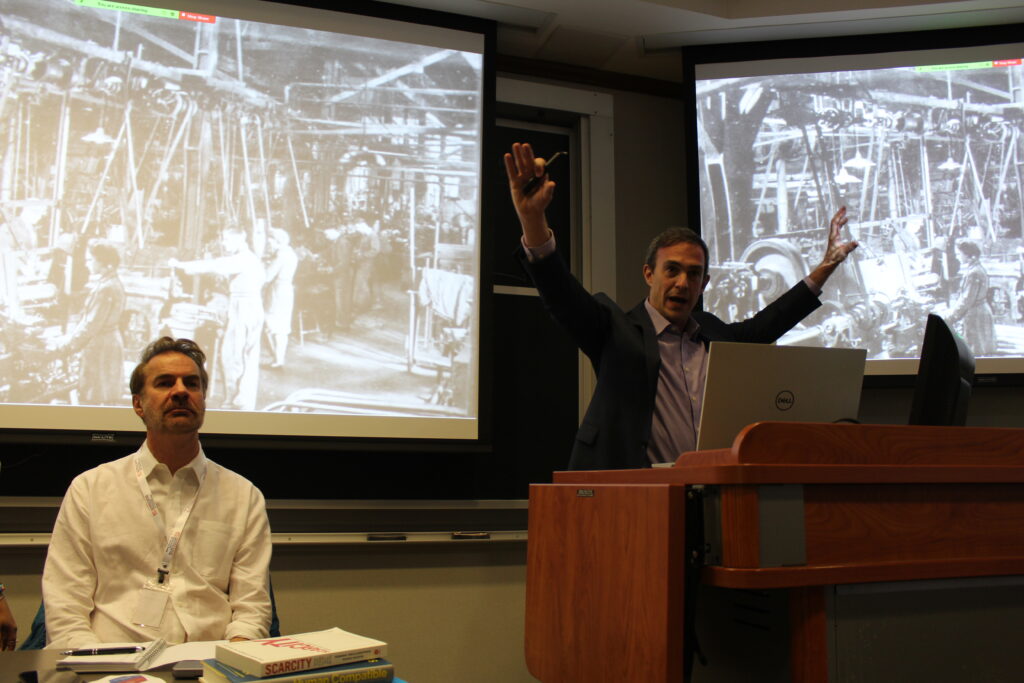

Such a convening was organized in October by the Institute on the Formation of Knowledge (IFK) in partnership with the Institute for Mathematical and Statistical Innovation, Knowledge Lab, and the Sentience Institute. The three-day “Summit on AI in Society” capped a three-year IFK research theme on artificial intelligence with panels, talks, and a keynote by acclaimed science-fiction author Ted Chiang.

To begin the summit, Shadi Bartsch-Zimmer, Director of the Institute on the Formation of Knowledge and Helen A. Regenstein Distinguished Service Professor of Classics and the Program in Gender Studies, acknowledged how much the field of AI had progressed since the institute's AI research theme began in 2020.

“These changes illustrate the unique promise but also the perils of AI technology, as it permeates our society, our body and our planet,” Bartsch-Zimmer said. “This event is intended to analyze and reflect on the history of AI to date, to collaboratively debate and understand its future and see what actions we can take as scholars and agents in the world to ensure that these new developments go as well as possible.”

In his keynote and a subsequent discussion with James Evans, Professor of Sociology, Director of Knowledge Lab, and member of the Data Science Institute (DSI) research advisory committee, Chiang focused on his concern that unregulated AI applications could exacerbate wealth disparities, worker exploitation, and other economic and social injustices. “Is there a way for AI to do something other than sharpen the knife blade of capitalism?” he asked.

“If AI is going to be more beneficial for the future of humanity than inventing new kinds of snack food, then it’s time to do more than solve the problem of how a task ordinarily performed by humans could be performed by a machine,” Chiang concluded. “It’s time to take a step back and think about whose interests are served by such a solution, and whether solving that problem is actually helping to bring about a better world.”

Subsequent panels such as “The Trajectory of AI,” “The 4th Industrial Revolution,” and “AI and the Scientific Method” examined this ambitious vision and the possible dangers along the path. These debates around AI are not new. As Melanie Mitchell of the Santa Fe Institute described, AI has been locked in cycles of hype and disappointment (“AI winters”) for the last 75 years. Achievements such as AlphaZero and Watson were touted as important steps toward recreating human abilities and intelligence, yet proved limited outside their original use cases of winning at games such as Go or at Jeopardy!.

But Rebecca Willett, Professor of Statistics and Computer Science at the University of Chicago and Faculty Director of AI at the Data Science Institute, suggested that physics-based machine learning could push past these barriers and accelerate discoveries in scientific fields. The theme recurred throughout the summit. In a later panel on AI and the Scientific Method, Professors Sendhil Mullainathan, Juan de Pablo, and Ross King (inventor of the Robot Scientists) highlighted AI's potential to yield both new discoveries and new pathways to discovery. Stuart Russell, Professor of Computer Science at UC Berkeley, put his wager on probabilistic programming, suggesting that the goal of artificial general intelligence may be attainable in his children’s lifetimes. But if that’s the case, now is the time to start building in protections, Russell said.

“We need to change the way we think about the nature of AI as a field. I think it needs to be much more like aviation or nuclear energy where it’s built on layers of well-understood, mathematically-rigorous, and physically-tested technologies with regulation,” Russell said. “We can’t afford a Chernobyl with super intelligent AI systems.”

In the shorter term, excitement and debate will sprout around new applications such as DALL-E 2, a recently released AI system that can generate realistic images from a text description. A panel moderated by UChicago Associate Professor of Visual Arts Jason Salavon discussed “using computation to explore the nature of creativity” and whether artists and writers should consider these systems a boon or a threat. Alexei Efros of Berkeley focused on the positives, comparing AI tools to cinema's relationship with theater and photography's relationship with painting – innovations that didn’t replace a field, but instead created new ones.

“[AI] is just another brush, it’s a watercolor. It has a little bit of a mind of its own, like watercolor does, but it’s a tool that I’m using,” Efros said. “From the point of view of AI as creative tools, I think we have nothing to fear.”

Other fields are increasingly using AI as a decision-making tool in self-driving vehicles, medical diagnosis, and policing strategy. In “Trusted and Trustworthy AI,” a panel of scientists from the University of Chicago Department of Computer Science and the Toyota Technological Institute at Chicago asked whether we can understand why and how AI systems make decisions, particularly in consequential applications in medicine, transportation, and policy.

UChicago Assistant Professor of Computer Science and Data Science Chenhao Tan emphasized the importance of human-AI communication for interpretability, enabling experts to inspect, control, or even contest a model's conclusions. Ben Zhao, Neubauer Professor of Computer Science at UChicago and a security expert, asked whether AI even deserves trust in a world where the “cat-and-mouse loop” of defense and attack compromises most systems within months.

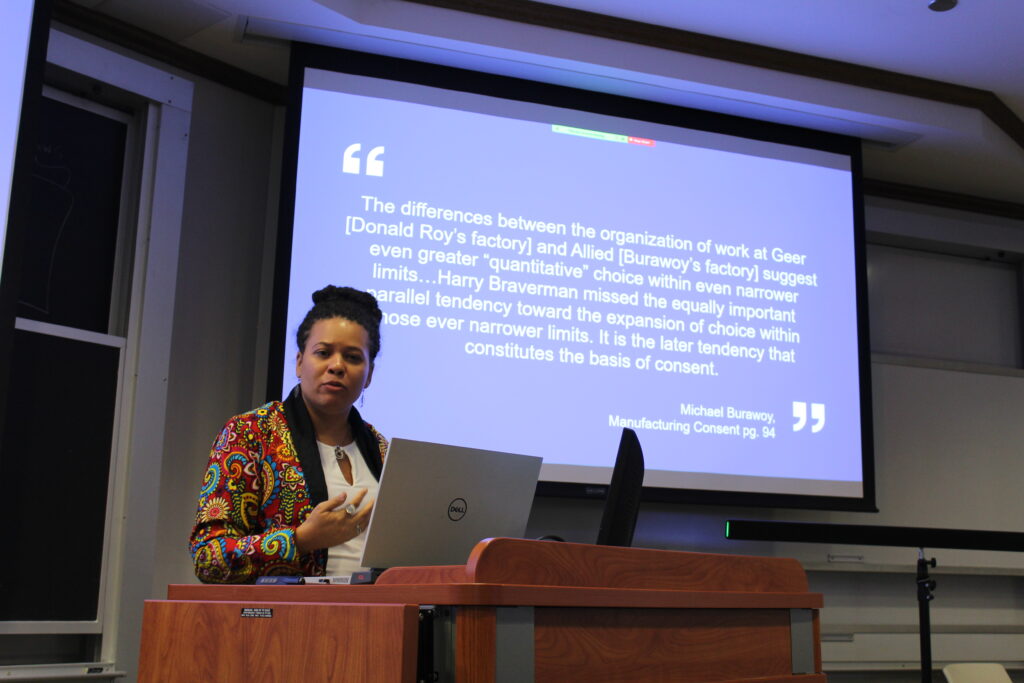

A panel on AI and the 4th Industrial Revolution featured UChicago researcher Pedro Lopes and social scientists Erik Brynjolfsson from the Stanford Digital Economy Lab and Avi Goldfarb from the Creative Destruction Lab at the University of Toronto. The panelists explored the potential for complementary AI to augment, rather than replace, human capacity and to generate novel opportunities for individual and societal prosperity. Lindsey Cameron from the Wharton School at the University of Pennsylvania tempered this enthusiasm by raising concerns about AI's current impact on workers, who can become entangled in a thicket of micro-incentives, drawing on her own fieldwork as an Uber driver in Chicago.

Panelists in the “AI and Nonhumans” session grappled with the philosophical and ethical implications of the technology, drawing on Aristotle and Descartes to ponder whether these systems should hold the rights of “personhood.” Brian Christian, author of books such as *The Alignment Problem*, said the AI question is only the latest chapter in thousands of years of debate.

“Determining who or what is sentient is, in some sense, the foundation of almost any moral code,” Christian said. “Despite the utterly central place that it has in our ethics, this is still rather mysterious, and yet we understand it a lot better than we did a generation ago, which I think is thrilling. So let us then welcome each incremental development both in computer science and in neuroscience as an invitation to reckon with how little we know about the ultimate mystery at the center of both the physical and the moral universe.”

The Summit on AI in Society was funded by the Institute for Mathematical and Statistical Innovation and hosted at the Stevanovich Center for Financial Mathematics. Photos by Jacy Reese Anthis.