Inderjit S. Dhillon (The University of Texas at Austin) – MatFormer: Nested Transformer for Elastic Inference

Part of the Spring 2024 Distinguished Speaker Series and the CAM Colloquium.

Large Language Models are powered by the underlying transformer deep learning architecture. These models are deployed in a wide range of settings, from multi-accelerator clusters to standalone mobile phones. The diverse memory and computational constraints in these scenarios require practitioners to train models of various sizes, one for each constraint. Training each of these models is computationally expensive, and each must also be maintained separately. Moreover, this limits fine-grained control over relevant tradeoffs, including latency, cost, and accuracy. In this talk, I will introduce our recent work in this direction, “MatFormer”, which stands for “Matryoshka” Transformers. This transformer architecture encapsulates information in a nested manner, facilitating the extraction of subnetworks tailored to specific constraints. I will discuss the training methodology, the advantages over existing methods, and results across different model classes (decoders & encoders), modalities (language & vision), and scales (up to 2.6 billion parameters). Finally, I will outline our future directions to enhance efficiency and scalability for the training and deployment of such large models.

Bio: Inderjit Dhillon is the Gottesman Family Centennial Professor of Computer Science and Mathematics at UT Austin, where he is also the Director of the ICES Center for Big Data Analytics. Currently he is on leave from UT Austin and is a Distinguished Scientist at Google. Prior to that, he was Vice President and Distinguished Scientist at Amazon, and headed the Amazon Research Lab in Berkeley, California, where he and his team developed and deployed state-of-the-art machine learning methods for Amazon Search. His main research interests are in machine learning, big data, deep learning, network analysis, linear algebra, and optimization. He received his B.Tech. degree from IIT Bombay and his Ph.D. from UC Berkeley. Inderjit has received several awards, including the ICES Distinguished Research Award, the SIAM Outstanding Paper Prize, the Moncrief Grand Challenge Award, the SIAM Linear Algebra Prize, the University Research Excellence Award, and the NSF CAREER Award. He has published over 200 journal and conference papers, and has served on the Editorial Board of the Journal of Machine Learning Research, the IEEE Transactions on Pattern Analysis and Machine Intelligence, Foundations and Trends in Machine Learning, and the SIAM Journal on Matrix Analysis and Applications. Inderjit is an ACM Fellow, an IEEE Fellow, a SIAM Fellow, an AAAS Fellow, and an AAAI Fellow.

Agenda

Friday, April 5, 2024

Lunch

Lunch will be provided on a first-come, first-served basis.

Talk and Q&A

Navigating the Data Science Job Market: Insights and Opportunities

Brandon Stewart (Princeton University) – Getting Inference Right with LLM Annotations in the Social Sciences

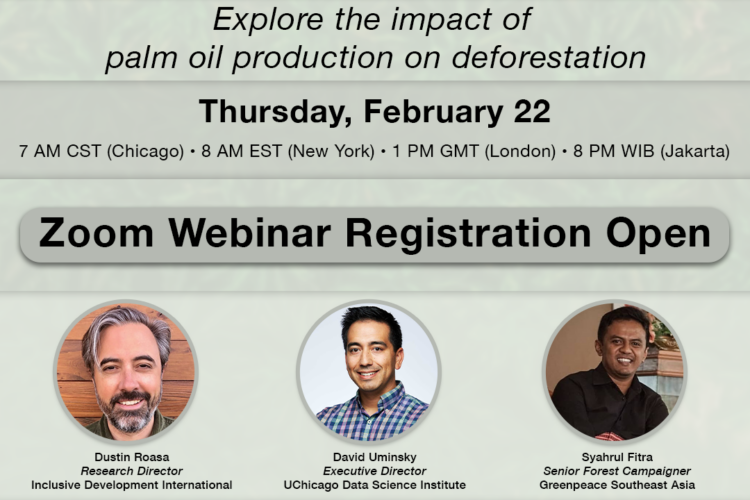

Introducing PalmWatch: Mapping the impact of big brands’ palm oil use